Building

Multitiered Client/Server Apps with Visual Basic 4.0 Enterprise

Edition, Part II

by Jenny Notestein

Jenny Notestein is an

enterprise development consultant in the New York financial

district. She can be reached at notestein@msn.com.

This is the second

part of a three-part series on enterprise development in the

corporate world using Microsoft® Visual Basicģ 4.0

Enterprise Edition. In this series, I’m examining

what’s involved in building a robust, multitiered

client/server application for a corporate client. The

application I’m building is a data-warehouse-type

decision support reporting system for line managers that is

designed to use the features of Visual Basic, ActiveX™

technology, and BackOffice™ to provide an elegant

solution to a real-world business need.

In Part I, "Building

Multitiered Client/Server Apps with Visual Basic 4.0

Enterprise Edition" (MSJ, November 1996), I

looked at developing the architecture of a 32-bit

application, including the basic properties and methods of

its components (it’s referred to in this article as the

Reporting System API). This month I’ll focus on the

implementation phase, doing some serious development of the

application’s Request Broker, virtual data object, and

Report Builder components. I’ll cover implementation

issues such as security, pool management, optimization, and

internationalization. Finally, I’ll build support for a

Web browser viewer that works with Microsoft Internet

In-formation Server (IIS) to display a finished report as an

HTML page.

In Part III of this series

I’ll wrap up the implementation and cover rollout issues

such as quality assurance, ongoing maintenance, and

enhancement requests. After I finish the application,

I’ll take a look at hot-and-fresh Visual Basic 5.0 for

dessert.

In

Our Last Episode…

Before I jump into

implementing my application, I’ll briefly review its

structure. The system is a generic data-warehouse-type

reporting facility designed as a decision support tool for

business line managers. It’s written in 32-bit Visual

Basic 4.0 Enterprise Edition and runs on Windows NT® 4.0

Workstation for the user service component, and Windows NT

4.0 Advanced Server for the business service components. My

reporting system extracts data and compiles useful reports

from a wide variety of existing DBMSs commonly found in

corporate enterprises.

The standard personnel-type

reports that are used as examples in this article are just

that—simple examples. This system is designed with a

flexible architecture; components can be built to produce

virtually any business report desired. I chose the Employee

Info example because it’s a great fit for the demo data

that I lifted from the Northwind database that ships with

Microsoft Access.

The basic user service

component is called the Reporter, which runs locally on

users’ desktops. It includes a wizard interface through

which the user chooses a report, selects a preferred format

for viewing the report, and enters any required parameters.

The data used to populate the wizard pages and the

information the user enters on those pages are handled by the

primary business service component—the Request

Broker—which is an ActiveX server.

The Request Broker passes the

information collected through the wizard to a specific

virtual data object business service component. A separate

virtual data object ActiveX server will be prebuilt for each

of the reports available to the user, although the API for

every virtual data object remains the same. Each virtual data

object will connect to the appropriate data services and

construct the query or queries necessary to build the

resultset from which the desired report can be built. The

resultset is then cached in an SQL Server™ 6.5 business

service component. The virtual data object passes the

location of this data to the Request Broker.

The Request Broker passes the

location of the resultset to a specific Report Builder

business service component. A separate Report Builder ActiveX

server will be prebuilt to format a cached resultset into one

of the formats offered to the user, such as a Microsoft Excel

spreadsheet, a comma-delimited file, or an HTML page, again

using the Reporting System API. The Report Builder deposits

the completed report in the data cache and passes its

location to the Request Broker. The Request Broker passes the

location of the completed report to the Reporter running on

the user’s workstation, which displays the report to the

user in a Viewer.

Report

Build Message Object, Unstubbed

In Part I, I talked about the

application employing a report build message object, which

contains the two-way messages used to build the wizard pages

and collect the data entered in them by the user. The

wizard-building data was previously stubbed. This stubbed

information has now been replaced with real, live data.

Since two of my goals are to

build a thin client and to take advantage of the Services

model for distributed application design, the data for the

report build message object has been neatly stored in a

database out on the business service SQL Server 6.5

component. Tables in this database provide the originating

content for the messages, which comes down from the server on

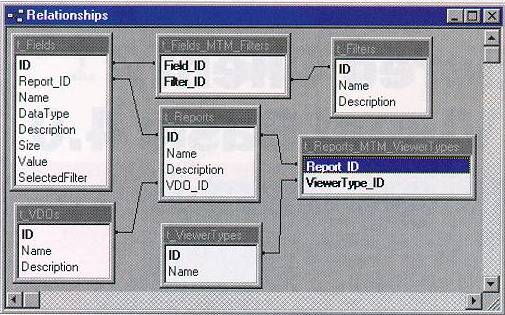

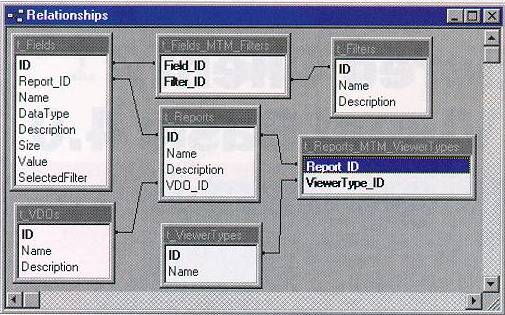

a per-report basis each time the application is run. Figure

1 shows an entity-relationship diagram of the database

design.

Figure

1 Entity relationships

The major entity for my

reporting system is, of course, the report. The reports table

contains the name of each report, its description, and the ID

of the virtual data object that will retrieve the data for

the report. The fields table contains the fields that are

presented to the user through the wizard interface, from

which the user chooses the filtering values that are built

into the Where clause of the virtual data object’s SQL

statement. Each virtual data object is built to contain the

projection clause of the Select statement used to retrieve

the necessary resultset or sets from databases across the

enterprise.

Down the road, I might want to

add a column to the fields table called UI_Name that includes

a friendly name more suitable for displaying in the user

interface than the counterintuitive names commonly found in

databases—particularly in legacy systems—where the

attribute names are typically abbreviated, concatenated,

truncated, oddly prefixed, and otherwise mutilated. At

present, I’m simply aliasing the names in the Select

statements against this table.

New to this version of my

application are the viewer type components of the report

build message object, which contain the information on how

the report is presented to the user. Each report has a number

of available viewer types, from which the user selects one.

Behind the scenes, each viewer type corresponds to a Report

Builder ActiveX server and a viewer component of the Reporter

application on the client.

A many-to-many relationship

exists between the fields and the filters and between the

reports and the viewer types. The MTM tables in Figure

1 are the correlative links for

these relationships.

Building the tables on SQL

Server 6.5 is a straightforward process using the Manage

Tables component of the Microsoft SQL Enterprise Manager,

which provides an interface for defining fields (datatypes,

lengths, and so on), creating indexes (primary, clustered,

and so on), and granting permissions (at the user/group or

object levels). The Generate SQL Scripts applet of the

Enterprise Manager extracts the data definition language

(DDL) so that it can be hand-modified and re-executed when

necessary—for example, to delete a column during

development or to execute some other functionality that is

not directly provided by the Manage Tables interface. The DDL

for the fields table is shown in Figure 2.

Figure 2 DLL

of Fields Table

/*

Microsoft SQL Server - Scripting */

/*

Server: MSI_NJ_SERVER */

/*

Database: RequestBroker */

/*

Creation Date 11/3/96 4:39:03 PM */

GRANT

CREATE TABLE TO public

GO

GRANT

CREATE TABLE TO public

GO

/***

Object: User RequestBroker Script Date: 11/3/96 4:39:04 PM ***/

if

not exists (select * from sysusers where name = 'RequestBroker'

and uid <

16382)

EXEC

sp_adduser 'RequestBroker', 'RequestBroker', 'public'

GO

/***

Object: Table dbo.t_Fields Script Date: 11/3/96 4:39:04 PM ***/

if

exists (select * from sysobjects where id =

object_id(‘dbo.t_Fields’) and

sysstat

& 0xf = 3)

drop

table dbo.t_Fields

GO

/***

Object: Table dbo.t_Fields Script Date: 11/3/96 4:39:04 PM ***/

CREATE

TABLE dbo.t_Fields (

ID

int NULL ,

Report_ID

int NULL ,

Name

varchar (255) NULL ,

DataType

smallint NULL ,

Description

varchar (255) NULL ,

Size

smallint NULL ,

Value

varchar (255) NULL ,

SelectedFilter

smallint NULL

)

GO

CREATE

UNIQUE CLUSTERED INDEX ID ON dbo.t_Fields(ID)

GO

GRANT

SELECT ON t_Fields TO public

GO

The

Application in Action

When the user clicks the

Request Wizard button in the Reporter executable, it invokes

the Request Broker’s GetReportsList method (shown in Figure

3), which goes out to the SQL Server and looks in the

reports table for the list of available reports.

Figure 3 GetReportsList

Public

Sub GetReportsList(asReportList() As String)

'PART

II

'Has

been changed from a Test Harness/Stub for Reporter

'

to develop against.

'

Now draws data from Data Cache to build message structure.

Dim

oResultset As rdoResultset

Set

oResultset = oConnection.OpenResultset("Select * from _

t_Reports",

rdOpenKeyset, rdConcurReadOnly)

oResultset.MoveLast

ReDim

asReportList(1, oResultset.RowCount) As String

oResultset.MoveFirst

Dim

iRowCount As Integer

Do

While Not oResultset.EOF

asReportList(0,

iRowCount) = oResultset.rdoColumns("Name")

asReportList(1,

iRowCount) = _

oResultset.rdoColumns("Description")

iRowCount

= iRowCount + 1

oResultset.MoveNext

Loop

Set

oResultset = Nothing

End

Sub

The user selects a report from

the Pick a Report listbox of the wizard, which fires the

click event code shown in Figure 4. The wizard then

populates the Pick a Viewer Type listbox by calling the

GetReportDescription method of the Re-quest Broker (see Figure

5). The name of the selected report and an empty message

structure are passed in to GetReportDescription.

Figure 4 1stReportList

Private

Sub lstReportList_Click()

'PART

II

'Populate

the ViewerType list on report selection

sbMain.Panels.Item(1).Text

= asReportsList _

(1,

lstReportList.ListIndex)

lstViewerType.Clear

Set

oMessage = Nothing

oRequestBroker.GetReportDescription

lstReportList.Text, oMessage

Dim

iLoop As Integer

For

iLoop = 1 To oMessage.ViewerTypes.Count

lstViewerType.AddItem

oMessage.ViewerTypes.Item(iLoop).Name

Next

iLoop

End

Sub

The message structure consists

of three parts: header information, fields information, and

viewer type information. The addition of the viewer type

object doesn’t break the original code from the first

article; it does change the type library, which means that

all the components of the reporting system need to be

recompiled, since they all contain references to the type

library for early binding.

The header contains the name

of the report that’s passed in to GetReportDescription.

In addition, the header generates a unique ID that will let

me store and identify these message structures on a

collection queue if the application is changed in the future

to execute asynchronously.

The header and viewer type

objects are relatively sparse at present, but they are built

as objects in order to leave room for expanding properties

and methods as the application develops in the future.

Passing them around as variables or arrays would limit my

ability to expand their richness without breaking existing

code.

The fields information,

previously populated with dummy data, is now populated with

data from the SQL Server 6.5 data cache. The Select statement

shown in the Load Field Information section of Figure 5

is used for generating the All Employees report. This is

where I alias the fields to produce more user-friendly column

headers when I get the data back.

Figure 5 GetReportDescription

Public

Sub GetReportDescription(sName As String, oMessage As _

ReportBuildMessage)

'PART

II

'Report-building

message structure is built here by using the passed-in

'

report name to query the database

'Load

Header Information ====================

oMessage.Header.ReportName

= sName

oMessage.Header.ReportID

= sUniqueName

'Load

Field Information =======================

Dim

oResultset As rdoResultset

Dim

sSQL As String

sSQL

= "select t_Reports.Description as ReportDescription, _

t_Fields.Name

as FieldsName, t_Fields.DataType, " & _

"t_Fields.Description

as FieldsDescription, t_Fields.Size, _

t_Fields.Value,

" & _

"t_Fields.SelectedFilter,

t_Filters_Selected.Name as _

FiltersSelectedName,

t_Filters.Name as FiltersName, " & _

"t_Filters.Description

as FiltersDescription from t_Fields, _

t_Fields_MTM_Filters,

t_Filters, " & _

"t_Filters

t_Filters_Selected, t_Reports where t_Reports.Name = '" _

&

sName & "' and " & _

"t_Fields.Report_ID

= t_Reports.ID and " & _

"t_Fields.ID

= t_Fields_MTM_Filters.Field_ID and " & _

"t_Fields_MTM_Filters.Filter_ID

= t_Filters.ID and " & _

"t_Fields.SelectedFilter

= t_Filters_Selected.ID order by _

t_Reports.ID,

t_Fields.ID, t_Filters.ID"

Set

oResultset = oConnection.OpenResultset(sSQL, rdOpenKeyset, _

rdConcurReadOnly)

Dim

sCurrentFieldName As String

Dim

sCurrentFilterName As String

'Do

While there are records

Do

While Not oResultset.EOF

'Set

Current name

sCurrentFieldName

= oResultset.rdoColumns("FieldsName")

'Create

a Field object

oMessage.Fields.Create

sCurrentFieldName

'Set

Field properties

With

oMessage.Fields.Item(sCurrentFieldName)

'Cast

rdo data types

.Name

= sCurrentFieldName

.DataType

= vFixRDOData(oResultset.rdoColumns("DataType"))

.Description

= vFixRDOData _

(oResultset.rdoColumns("FieldsDescription"))

.Size

= vFixRDOData(oResultset.rdoColumns("Size"))

.Value

= vFixRDOData(oResultset.rdoColumns("Value"))

.SelectedFilter

= vFixRDOData _

(oResultset.rdoColumns("FiltersSelectedName"))

End

With

'Do

While We are on the same Field and have records

Do

While Not oResultset.EOF

If

sCurrentFieldName <> oResultset.rdoColumns _

("FieldsName")

Then Exit Do

'Set

current filter name

sCurrentFilterName

= oResultset.rdoColumns("FiltersName")

'Create

the filter object and set its properties

With

oMessage.Fields.Item(sCurrentFieldName).Filters

.Create

sCurrentFilterName

With

.Item(sCurrentFilterName)

.Name

= vFixRDOData _

(oResultset.rdoColumns("FiltersName"))

.Description

= vFixRDOData _

(oResultset.rdoColumns("FiltersDescription"))

End

With

End

With

'Go

to the next record

oResultset.MoveNext

Loop

Loop

'Load

Viewer Information ======================

sSQL

= "Select t_ViewerTypes.Name from t_Reports, _

t_Reports_MTM_ViewerTypes,

t_ViewerTypes where " & _

"t_Reports.Name

= '" & sName & "' and " & _

"t_Reports.ID

= t_Reports_MTM_ViewerTypes.Report_ID and " & _

"t_Reports_MTM_ViewerTypes.ViewerType_ID

= t_ViewerTypes.ID " & _

"order

by t_ViewerTypes.Name"

Set

oResultset = oConnection.OpenResultset(sSQL, rdOpenKeyset, _

rdConcurReadOnly)

Do

While Not oResultset.EOF

oMessage.ViewerTypes.Create

(oResultset.rdoColumns("Name"))

oMessage.ViewerTypes.Item((oResultset.rdoColumns("Name")))

_

.Name

= (oResultset.rdoColumns("Name"))

oResultset.MoveNext

Loop

Set

oResultset = Nothing

End

Sub

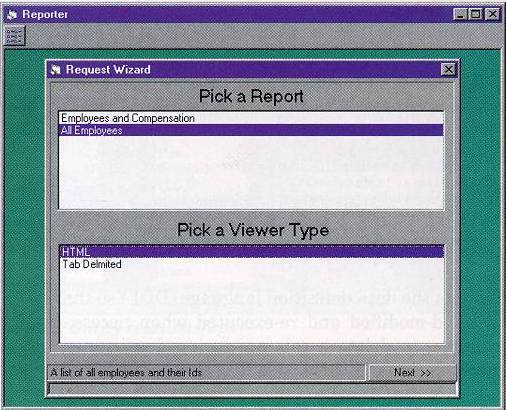

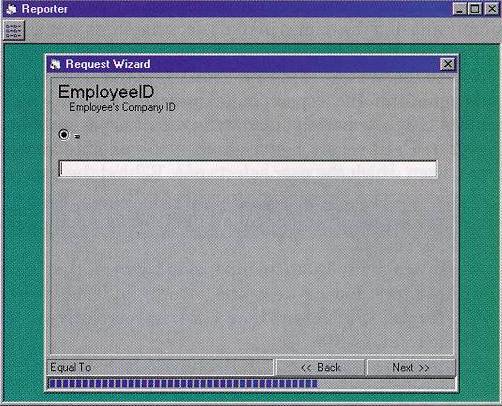

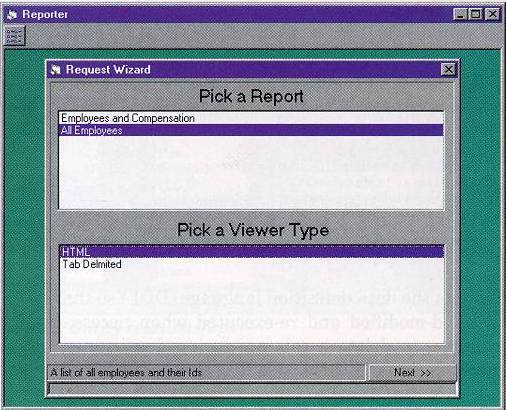

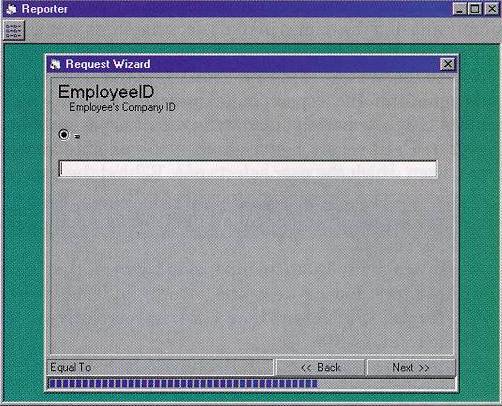

At this point, the user

selects a viewer type to continue with the wizard, which

looks like Figure 6. The field information is then

entered as the filter values in the continuing pages of the

wizard (see Figure 7). After the user clicks the

Finished button, the code in Figure 8

executes, which loads a ReportRunning form that is displayed

while the data is fetched and processed into the report.

Figure

6 Request Wizard reports and viewers

Figure

7 Entering more info in the Request Wizard

The Request Broker now needs

to hand off the report build information to a virtual data

object to fetch the desired data. To get the name of the

virtual data object, the SubmitReportRequest method of the

Request Broker (shown in Figure 9) does a join between

the reports table and the virtual data objects table using

the report name passed in the message. This gives me the name

of the object I want to create as the virtual data object.

Figure 8 Finished

'Finished

'PART

II

If

pbMain.Value = pbMain.Max Then

'Hide

the Wizard

Me.Hide

'Load

the ReportRunning form, configure,

'

center, then show it

Load

frmReportRunning

CenterChild

frmReporter, frmReportRunning

frmReportRunning.Message

oMessage.Header.ReportName

frmReportRunning.Show

DoEvents

oReportDone.ViewerType

= oMessage.Header.ReportType

oReportDone.ReportName

= oMessage.Header.ReportName

'Submit

the request

oRequestBroker.SubmitReportRequest

oMessage, oReportDone

'Being

synchronous, when we hit this point, the report

'

is done

Unload

frmReportRunning

Unload

Me

'Call

the routine that will look at the oReportDone

'

message and display the report

ProcessResults

oReportDone

Exit

Sub

End

If

I’ve written a relatively

simple virtual data object called EmployeeFinancial that

provides a complete illustration of the flow process of the

Reporting System API as it interacts with the other objects

in the loop. EmployeeFinancial analyzes the messages it

receives from the Request Broker, puts together a SQL

statement, retrieves the data from an ODBC datasource, and

caches the resultset in the SQL Server 6.5 data cache, again

using ODBC. It then tells the Request Broker where the

resultset is, hiding all of the steps necessary to get the

data from all other components but itself.

Figure 9 SubmitReportRequest

Public

Sub SubmitReportRequest(oMessage As ReportBuildMessage, _

oReportDone

As ReportDone)

'PART

II

'It

is here that we Handle a requested Report

Dim

oResultset As rdoResultset

Dim

sSQL As String

Dim

oVDO As Object

Select

Case oMessage.Header.ReportType

'In

places like this we should be using the IDs but I've

'

elected to use the string names everywhere to make it

'

easier to follow and understand

Case

"Excel"

'To

be added

Case

"HTML"

sSQL

= "Select t_VDOs.* from t_Reports, t_VDOs where _

t_Reports.Name

= '" & _

oMessage.Header.ReportName

& "' and t_VDOs.ID = _

t_Reports.VDO_ID

"

Set

oResultset = oConnection.OpenResultset(sSQL, _

rdOpenKeyset,

rdConcurReadOnly)

Set

oVDO = CreateObject(oResultset.rdoColumns("Name"))

Set

oResultset = Nothing

oVDO.BuildResultset

oMessage, oReportDone

If

oReportDone.ReportError = "" Then

'Process

Resultset into an HTML Report

Set

foBuilder = CreateObject("ReportBuilder" & _

oReportDone.ViewerType

& ".ReportBuilder")

foBuilder.Build

oReportDone

Set

oVDO = Nothing

End

If

Case

"Tab Delimited"

'To

be added

End

Select

'Transfer

the type

oReportDone.ViewerType

= oMessage.Header.ReportType

End

Sub

From an architectural

standpoint, the SQL-generating code belongs to the virtual

data objects and not the Request Broker, since virtual data

objects are the only ones that know what they have to do to

build the finished resultset. If, in the future, a group of

virtual data objects is developed that all login to the same

set of datasources and provide similar functionality, I could

migrate out some of the shared functionality into a common

module that’s used by those objects. But this

functionality should remain decoupled from the Request Broker

because a virtual data object might have to do some pretty

complex things to get the data, such as: running macros that

insert new operators into the messages as they convert

user-friendly options from the wizard interface into

meaningful SQL; translating the SQL for different target

database engines; and performing complex joins across

multiple datasources. Only the individual virtual data object

will understand the business rules required to fulfill the

request.

After the Request

Broker’s SubmitReportRequest method identifies and

creates the virtual data object, it calls the BuildResultset

method of the virtual data object (see Figure 10),

passing it the message object with all of the stuff that it

has accumulated so far, plus the ReportDone object, which is

the message I use for conveying information about the

completion process of the report. This replaces the callback

function used in the first part of this series. (As a plot

complication, the callback function turns out to be more

overhead than necessary since I’ve committed to

synchronous execution. I’ll go over this more thoroughly

in the Pool Manager section.)

Figure 10 BuildResultset

Public

Sub BuildResultset(oMessage As ReportBuildMessage, _

oReportDone

As ReportDone)

Dim

oResultset As rdoResultset

Set

oResultset = oConnectionSource.OpenResultset _

(GenerateSQL(oMessage),

rdOpenKeyset, rdConcurReadOnly)

oReportDone.Report

= CacheData(oResultset)

End

Sub

When the virtual data object

is initialized (see Figure 11), it does what’s

required to make contact with the ODBC datasource names

(DSNs) that it’s going to work with. In my

EmployeeFinancial example, two separate DSNs are

preconfigured on the business server machine for the virtual

data object to use, one for the source data and another for

the target database in the data cache. These two DSNs use

different user IDs and passwords to connect to different

databases that both, for my example, reside in the local SQL

Server 6.5.

Figure 11 Class

Initialize

Private

Sub Class_Initialize()

Set

oEnvironmentVDO = rdoEngine.rdoCreateEnvironment _

("VDO

Employee Financial", "VDOEmployeeFinancial", _

"vdoemployeefinancial")

Set

oConnectionSource = oEnvironmentVDO.OpenConnection _

("EmployeeFinancial",

rdDriverNoPrompt, False, "")

Set

oEnvironmentCache = rdoEngine.rdoCreateEnvironment _

("VDO

Cache", "VDOCache", "vdocache")

Set

oConnectionCache = oEnvironmentCache.OpenConnection _

("VDO",

rdDriverNoPrompt, False, "")

End

Sub

The BuildResultset method of

the virtual data object then calls the GenerateSQL function

shown in Figure 12. GenerateSQL takes the message

object, walks the fields, pulls out the information the user

has supplied, and builds the SQL statement from it. If the

field value is nothing, then the user didn’t input any

data, so it’s ignored. In this relatively simple

example, the Where clause is built from the field name, the

selected filter, the field value, and an And operator.

I’m using the real field

names and filter names in the message object to make

constructing the SQL statement a clear process. As I

mentioned earlier with respect to UI names, I might want to

build in support at the table level for translating the real

names into something easier for users to digest when

populating the messages to build the wizard. These

user-friendly names would then be replaced with the actual

database field names here in GenerateSQL.

Figure 12 GenerateSQL

Private

Function GenerateSQL(oMessage As ReportBuildMessage) As String

Dim

sSQL As String

Dim

iLoop As Integer

Dim

oField As Object

For

iLoop = 1 To oMessage.Fields.Count

Set

oField = oMessage.Fields.Item(iLoop)

If

oField.Value <> "" Then

Select

Case oField.DataType

Case

1

sSQL

= sSQL & oField.Name & " " & _

oField.SelectedFilter

& " '" & _

oField.Value

& "' and "

Case

2, 3

sSQL

= sSQL & oField.Name & " " & _

oField.SelectedFilter

& " " & oField.Value & " and "

End

Select

End

If

Next

iLoop

'Remove

last and

If

iLoop > 0 Then

sSQL

= Left(sSQL, Len(sSQL) - 4)

End

If

#If

ACCESS_DB Then

sSQL

= "Select * from t_Employees where " & _

sSQL

& " order by LastName, FirstName"

#Else

sSQL

= "Select * from dbo.t_Employees where " & _

sSQL

& " order by LastName, FirstName"

#End

If

GenerateSQL

= sSQL

End

Function

The GenerateSQL function

includes syntax for conditionally compiling the final SQL

statement, depending on whether the database engine used is

Microsoft SQL Server 6.5 or Microsoft Access. I included this

feature because I figured that most people out in MSJ-land

have a copy of Microsoft Access hanging around, but might not

have a copy of SQL Server available for testing the reporting

system. Accordingly, the project files include an .mdb with

the tables and data necessary to run the application. Using

the Upsizing Wizard, this .mdb can be scaled up to SQL Server

or simply exported, if desired.

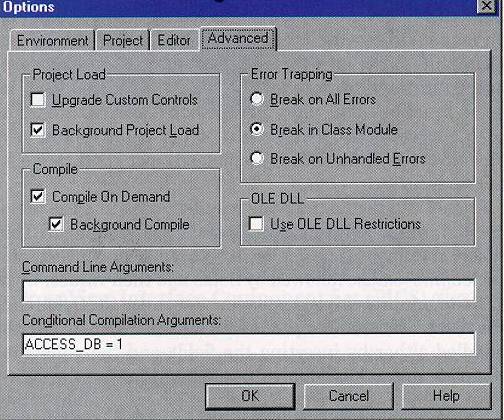

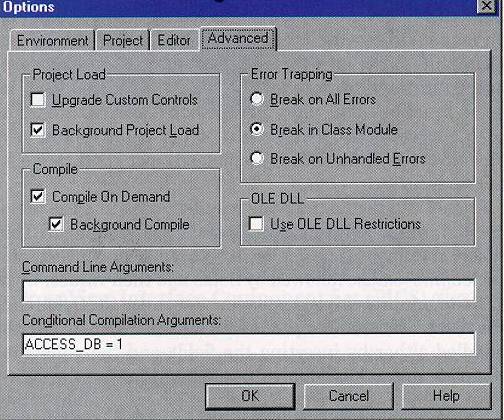

It’s worth mentioning

that Visual Basic compiler constants declared in code cannot

be scoped beyond the module in which they are declared. The

only way to define a compiler constant at the project level

is through the Options dialog from the Tools menu (see Figure

13).

Figure

13 Conditional compilation

The virtual data object’s

last job is to cache the data that it retrieves. The

resultset is passed to the CacheData function, shown in Figure

14. CacheData calls CreateCacheTable, which is shown in Figure

15, to create the table in which the data will be stored.

Figure 14 CacheData

Private

Function CacheData(oResultset As rdoResultset) As String

'If

we were always in the same datacache we could just do a

'

select into the new table. Since we don't want that limitation

'

we will pump the data

Dim

sTableName As String

Dim

sInsertStatic As String

Dim

sInsertDynamic As String

Dim

iLoop As Integer

sTableName

= CreateCacheTable

sInsertStatic

= "Insert into " & sTableName & " ("

For

iLoop = 0 To oResultset.rdoColumns.Count - 1

sInsertStatic

= sInsertStatic & _

oResultset.rdoColumns.Item(iLoop).Name

& ","

Next

iLoop

sInsertStatic

= Left(sInsertStatic, Len(sInsertStatic) - 1) & _

")

Values ("

Do

While Not oResultset.EOF

sInsertDynamic

= ""

For

iLoop = 0 To oResultset.rdoColumns.Count - 1

Select

Case oResultset.rdoColumns.Item(iLoop).Type

Case

rdTypeCHAR, rdTypeDATE, rdTypeTIME, _

rdTypeTIMESTAMP,

rdTypeVARCHAR

If

IsNull(oResultset.rdoColumns.Item(iLoop).Value) _

Then

sInsertDynamic

= sInsertDynamic & "Null,"

Else

sInsertDynamic

= sInsertDynamic & "'" & _

oResultset.rdoColumns.Item(iLoop).Value

& "',"

End

If

Case

Else

If

IsNull(oResultset.rdoColumns.Item(iLoop).Value) _

Then

sInsertDynamic

= sInsertDynamic & "Null,"

Else

sInsertDynamic

= sInsertDynamic & _

oResultset.rdoColumns.Item(iLoop).Value

& ","

End

If

End

Select

Next

iLoop

sInsertDynamic

= Left(sInsertDynamic, Len(sInsertDynamic) - 1) _

&

")"

oConnectionCache.Execute

sInsertStatic & sInsertDynamic

oResultset.MoveNext

Loop

fsTableName

= sTableName

CacheData

= sTableName

End

Function

Figure 15 CreateCacheTable

Private

Function CreateCacheTable() As String

Dim

sTableName As String

Dim

sSQL As String

#If

ACCESS_DB Then

sTableName

= "t_" & sUniqueName

sSQL

= "CREATE TABLE " & sTableName & " ("

& _

"EmployeeID

int ," & _

"LastName

varchar (20) ," & _

"FirstName

varchar (10) ," & _

"Title

varchar (30) ," & _

"TitleOfCourtesy

varchar (25) ," & _

"BirthDate

datetime ," & _

"HireDate

datetime ," & _

"Address

varchar (60) ,"

sSQL

= sSQL & "City varchar (15) ," & _

"Region

varchar (15) ," & _

"PostalCode

varchar (10) ," & _

"Country

varchar (15) ," & _

"HomePhone

varchar (24) ," & _

"Extension

varchar (4) ," & _

"ReportsTo

int ,"

sSQL

= sSQL & "Salary money ," & _

"Bonus

money )"

#Else

sTableName

= oEnvironmentCache.UserName & ".t_" &

sUniqueName

sSQL

= "CREATE TABLE " & sTableName & " ("

& _

"EmployeeID

int NULL ," & _

"LastName

varchar (20) NULL ," & _

"FirstName

varchar (10) NULL ," & _

"Title

varchar (30) NULL ," & _

"TitleOfCourtesy

varchar (25) NULL ," & _

"BirthDate

datetime NULL ," & _

"HireDate

datetime NULL ," & _

"Address

varchar (60) NULL ,"

sSQL

= sSQL & "City varchar (15) NULL ," & _

"Region

varchar (15) NULL ," & _

"PostalCode

varchar (10) NULL ," & _

"Country

varchar (15) NULL ," & _

"HomePhone

varchar (24) NULL ," & _

"Extension

varchar (4) NULL ," & _

"ReportsTo

int NULL ,"

sSQL

= sSQL & "Salary money NULL ," & _

"Bonus

money NULL)"

#End

If

oConnectionCache.Execute

sSQL

#If

ACCESS_DB Then

#Else

sSQL

= "GRANT REFERENCES , SELECT , INSERT , DELETE , _

UPDATE

ON " & sTableName & " TO public"

oConnectionCache.Execute

sSQL

#End

If

CreateCacheTable

= sTableName

End

Function

CreateCacheTable first creates

a table name by calling the UniqueName function (see Figure

16), which brings me to that familiar problem of having

to create persistent storage for objects using a unique name,

without any help from the user. Inevitably, this leads to

producing some type of unique name generator. I can’t

use the Win32® GetTempFilename API for two reasons. First,

the API returns a file name that could be longer than 30

characters, which SQL Server 6.5 will not like for a table

name. Secondly, the file name returned has a TMP file name

extension that I would have to change to HTM, which would no

longer guarantee my having a unique file name. In Visual

Basic, I’ve found that a combination of the day, the

month number, the year, and the number of seconds since

midnight provides a lot of uniqueness protection. As an extra

measure, I’ve cast the timer function as a double, which

also gives me the decimal number of seconds since midnight.

Server time is used, so it doesn’t matter what time the

client workstations think it is.

Figure 16 UniqueName

Private

Function sUniqueName() As String

'PART

II

'There

are more precise ways to generate unique names. GUIDs could even

'be

used. This is good enough for our needs. We might add a unique

'process

ID for the Request Broker using this to make the name more

'unique.

Dim

sHold As String

Dim

iPos As Integer

sHold

= Trim(Str(Format(Date, "ddmmyyyy"))) &

Trim(Str(CDbl(Timer)))

'Replace

any . with _

iPos

= InStr(1, sHold, ".")

sUniqueName

= Left(sHold, iPos - 1) & "_" & _

Right(sHold,

Len(sHold) - iPos)

End

Function

This is more than adequate to

keep a single process from stepping on its own tables stored

temporarily (for the life of the process) in the cache. But

with a high volume of virtual data objects running, there is

a chance that a collision might occur in the shared cache.

There are a few ways to handle this.

I could handle it like

Ethernet and let the failed process try again until it

succeeds at creating a unique name. Or I could generate a

unique ID for each process itself at the Request Broker

level, or even at the virtual data object and Report Builder

levels, and append this ID to the date-and-timer string. For

a really good time, I could call UUIDGEN.EXE or GUIDGEN.EXE

from the OLE2 ToolKit to create a Universally Unique

Identifier or, as it’s known in COM parlance, a Globally

Unique Identifier (GUID). GUIDs are 128 bits, which works out

to 32 hexadecimal characters. NTFS and Microsoft Access have

no problems with this. However, SQL Server 6.5 currently has

a 30-character limit on table names, so this option would

require a little tweaking.

After getting a table name

back from the UniqueName function, CreateCacheTable proceeds

to create the table (see Figure 15).

Again, I’ve provided the code to do this for both SQL

Server 6.5 and Microsoft Access, depending on your

environment. In this relatively simple example, the basic

differences are that SQL server tables are refer-enced by

their owners—which is not required, but is good

practice—and that permissions are granted for SQL Server

tables. This would be the point to add any amount of

additional security complexity using SQL Server’s native

security.

CacheData then pumps the

resultset data into the table created in the data cache using

an Insert statement. The Insert statement is basically

structured as an "Insert into tablename" clause

followed by fields and then values, with a one-to-one ordinal

correspondence between the fields and the values. This second

part of the insert statement can be handled either by

inserting the fields and the values in complete ordinal

assembly (paralleling the way the database columns were

created) or by mapping the values to the fields, with

separate sections of the Insert statement for the fields and

the values. This second method is more flexible, particularly

since the ordinal position of the fields will remain constant

during the data pumping process.

My resulting Insert statement

looks like

Insert into tablename

(fieldname, fieldname, …) values (value, value, …)

Everything up to the actual

values remains unchanged during the insert process, so that

information is put together as a static string to which the

values are appended for each row. As the resultset is

scrolled through one row at a time during the caching

process, the values are evaluated to determine if they are

null or if they are of a datatype that must be delimited with

single quotes (such as characters, timestamps, and so on). At

present, the example code is not equipped to handle BLOBS

(binary large objects), memo fields, or datatypes of similar

nature; support could be added, if required.

Once the data is completely

inserted into the data cache, control returns to the Request

Broker and the virtual data object is set to Nothing. This

brings me back to the Case "HTML" section of the

SubmitReportRequest method of the Request Broker shown in Figure

9, barring any errors (which I’m not trapping for in

this example because everything always works like a Swiss

watch in the textbook—right?). The next step is to

create the Report Builder object and pass it the Report Done

object that contains the name of the table with the stored

results in the data cache.

By design, the only interface

point for a Report Builder is its Build method, which is

passed the Report Done object. This Build method (see Figure

17), will be the same for all Report Builders. Using a

Select statement, it extracts the data cached by the virtual

data object in the local SQL Server 6.5, creating a

resultset. It then passes this resultset to the BuildReport

method, which manipulates the resultset to produce the report

in the desired format.

Figure 17 Build

Public

Sub Build(oReportDone As ReportDone)

Dim

oResultset As rdoResultset

Dim

sSQL As String

fsTableName

= oReportDone.Report

sSQL

= "Select * from " & oReportDone.Report

Set

oResultset = oConnectionSource.OpenResultset _

(sSQL,

rdOpenKeyset, rdConcurReadOnly)

BuildReport

oResultset, oReportDone

'Not

only required because it is a good habit, but

'

when using Access as the DataCache it will lock the table

'

and not allow us to drop it because the resultset is placed

'

on the rdoResultsets collection of the rdoConnection

'

object.

oResultset.Close

End

Sub

Figure 18 BuildReport

Private

Sub BuildReport(oResultset As rdoResultset, _

oReportDone

As ReportDone)

Dim

iFileHandle As Integer

Dim

sOutput As String

Dim

iLoop As Integer

Dim

iColumns As Integer

'If

we compile locally then place the resultant HTM file locally

#If

ACCESS_DB Then

fsFileName

= "C:\HTM\" & sStripOwner(oReportDone.Report) &

".htm"

#Else

'If we are on the server put it in the shared directory

fsFileName

= "W:\" & sStripOwner(oReportDone.Report) &

".htm"

#End

If

iFileHandle

= FreeFile

'Open/Create

the output file

Open

fsFileName For Output Access Write Lock Write As iFileHandle

'Start

document and table

Print

#iFileHandle, "<HTML> <TITLE> </TITLE>

<BODY> _

<TABLE

border> <TR>"

'Set

the number of columns

Print

#iFileHandle, "<TH colspan=" & _

oResultset.rdoColumns.Count

& ">"

'Set

the report name

Print

#iFileHandle, "<H3><A

NAME=""TT"">" & _

oReportDone.ReportName

& "</a></H3> </TH> </TR>

<TR>"

iColumns

= oResultset.rdoColumns.Count - 1

'Put

the column headers in

For

iLoop = 0 To iColumns

sOutput

= sOutput & "<TH>" & _

oResultset.rdoColumns.Item(iLoop).Name

& "</TH>"

Next

iLoop

'Finish

the headers

sOutput

= sOutput & "</TR>"

Print

#iFileHandle, sOutput

'Populate

the cells

Do

While Not oResultset.EOF

'Start

Row

sOutput

= "<TR>"

'Do

the cells

For

iLoop = 0 To iColumns

sOutput

= sOutput & "<TD>" & _

oResultset.rdoColumns.Item(iLoop).Value

& "</TD>"

Next

iLoop

'Finish

Row

sOutput

= sOutput & "</TR>"

Print

#iFileHandle, sOutput

'Next

Row

oResultset.MoveNext

Loop

'Finish

the document/report

Print

#iFileHandle,

"</TABLE></BODY></HTML>"

Close

iFileHandle

'Depending

on whether we are without or with server

'

set the URL for the report

#If

ACCESS_DB Then

oReportDone.Report

= "C|/HTM/" & sStripOwner _

(oReportDone.Report)

& ".htm"

#Else

oReportDone.Report

= "http://192.168.0.1/vdo/" & _

sStripOwner(oReportDone.Report)

& ".htm"

#End

If

End

Sub

The BuildReport method for my

example (see Figure 18) converts the resultset into an

HTML table that it then inserts into a file. The first step

is to create the file, which needs to be in a directory

that’s shared on the network as HTM, where both the

Report Builder and Microsoft Internet Information Server

(IIS) can get at it since they won’t necessarily be

running on the same machine. For my example, this shared

directory is mapped to drive letter W for the Report Builder,

and it’s mapped to drive letter X for IIS. Within IIS

this directory is aliased VDO, as Figure 19

illustrates. Figure 19 also indicates a default

document named default.htm for drive letter X that will be

displayed if there’s a failure somewhere along the way

and the user-service viewer tries to get a document that IIS

can’t find. The file default.htm will simply display

"Report Not Found."

Figure

19 WWW server directories

BuildReport then constructs a

basic HTML table with a header, column headers, and the data.

Stepping through the resultset one row at a time, it

encapsulates the data as HTML and shoves it into the file.

BuildReport then closes the file and sets the Report property

of the ReportDone object to the location of the file, which

is a URL composed of the location of IIS, the directory as it

knows it (VDO, in this case), and the file name. A desktop

version that runs without IIS stores the HTML-based table in

a file on the local C drive (see Figure 18).

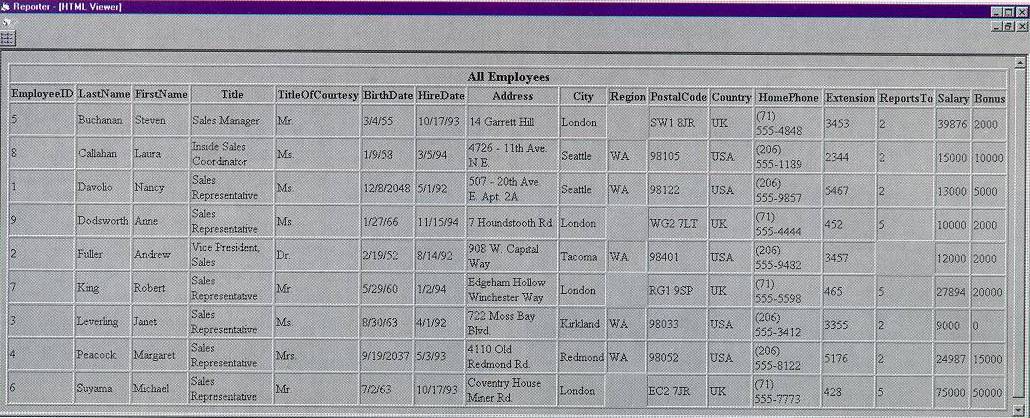

The Report Done object is

passed to the Request Broker, which serves it up hot to the

Reporter client, bringing me back to where ProcessResults is

called in the Reporter in Figure 8. The present

version of ProcessResults handles only HTML reports, which it

accomplishes by passing the URL to the viewer, which displays

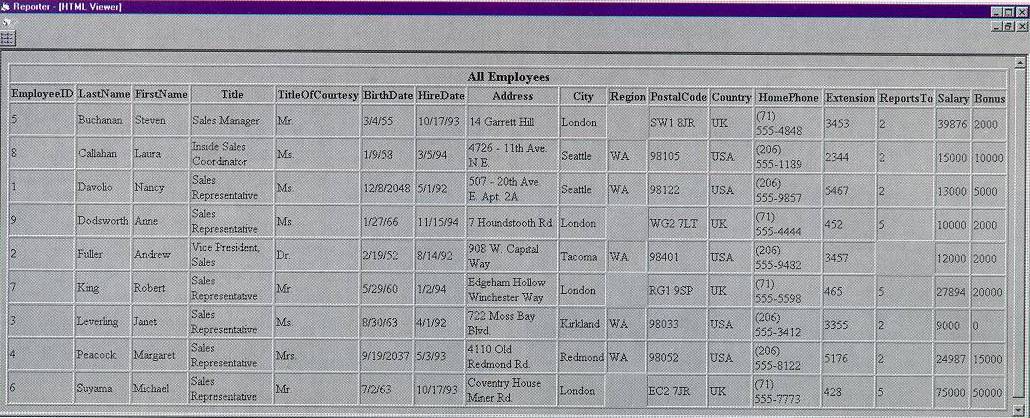

the finished report (see Figure 20).

Figure

20 HTML Viewer

The HTML viewer literally

snaps together. With Internet Explorer 3.0 and the Microsoft

Internet Controls installed, a reference to the Internet

Controls is added to the Reporter project. A Web Browser

ActiveX control is then added to a child form, called

frmViewerHTML in my example, that is fed the URL on one line

of code.

Not content to have things

that easy, I added a small routine called NewShow (see Figure

21). Due to timing and the way screens are repainted, the

Web browser object doesn’t smoothly resize to fit the

screen and impress the user in the Load event, so I shoved

the code into the NewShow method, which resizes the object

within the form.

Figure 21 NewShow

Public

Sub NewShow(sURL As String)

Load

Me

CenterChild

frmReporter, Me

wbMain.Navigate

sURL

DoEvents

Me.WindowState

= 2

wbMain.Top

= 0

wbMain.Left

= 0

Resize

DoEvents

Me.Show

End

Sub

Closing

the Application

When the user is done viewing

the report and has closed the viewer window, the unload event

fires and calls the Request Broker DestroyReport method. In

the Request Broker, I dereference the Report Builder by

setting the object variable to Nothing. In the Report Builder

terminate event (see Figure 22) the report is

destroyed, the cache table is destroyed, and the Report

Builder dies. The Request Broker stays alive until the client

closes the Reporter and the application is completely shut

down.

Figure 22 Terminate

Private

Sub Class_Terminate()

DestroyReport

DestroyTable

Set

oEnvironment = Nothing

Set

oConnectionSource = Nothing

End

Sub

Private

Sub DestroyReport()

Dim

dStartTime As Double

dStartTime

= Timer

On

Error GoTo ERROR_DestroyReport

Kill

fsFileName

Exit

Sub

ERROR_DestroyReport:

'Apparently

it takes some time for IIS to release the file so we

'

keep trying until it works.

'

Otherwise let garbage collection get it.

Select

Case Timer - dStartTime

'30

Second timer

Case

Is > 30

'Midnight

exception handle

Case

Is < 0

'Try

again... maybe IIS has let it go

Case

Else

Resume

End

Select

Exit

Sub

End

Sub

Private

Sub DestroyTable()

oConnectionSource.Execute

"Drop table " & fsTableName

End

Sub

The Report Builder hangs

around this long so that in a future version of the reporting

system there’s a link to the resultset cached by the

virtual data object. This gives me room to give the user the

option of seeing the data in different layouts while

incurring the overhead of retrieving potentially massive

amounts of data from all over the network only once.

In Part I of this series, I

talked about the Report Builder depositing the finished

report into the SQL Server 6.5 cache, where it could be

retrieved by the Reporter for display in a viewer using ODBC.

While that’s not the case for the HTML example developed

in this article, the option remains open for other Report

Builders.

Security

My main application security

is enforced by ACLs. I’ll set all of the remote ActiveX

servers to the Allow Remote Creates by ACL setting in the

Remote Automation Connection Manager. The client enforces no

security. Users are either permissioned at the appropriate

level within the domain or not. The end result is that, if

someone doesn’t have clearance, he or she won’t be

able to create Request Brokers, virtual data objects, and so

on. Don Box’s article "Introducing Distributed COM

and the New OLE Features in Windows NT 4.0," (MSJ

May 1996) describes the mechanisms used to enforce this

security.

With this security model in

mind, my Visual Basic application will have to trap any

failures detected by the Automation Manager and return a

suitable error, informing the user that security clearance is

not adequate.

Further security granularity

is enforced by SQL Server logins and permissions. Data is

partitioned by login and is available to objects on a

need-to-know basis. The virtual data object uses a data cache

user ID and password that is shared with the Report Builder,

and the Request Broker has a separate ID and password that it

uses to get at its metadata in a separate database. The

advantage of this is that different groups of developers can

add virtual data objects and Report Builders to this system

without being privy to any security other than that of their

own objects.

The user must be properly

permissioned to create a virtual data object. The virtual

data object has its own user IDs and passwords for the remote

data sources, and these IDs and passwords are, by design,

limited to Select-only permissions. These objects can’t

be made to do anything other than what they already do, which

is read the information. This will help satisfy the Audit

department’s desire to remove all possibility of

unauthorized users accessing data, or authorized users

corrupting accessible data.

Letting Windows NT handle the

security at the domain level also allows me to take advantage

of options built into Windows NT domain security, including

centralized security management and the ability to implement

more stringent security requirements. For example, Winlogon

contains a DLL named GINA (graphical identification and

authentication) that can be modified to accommodate

smartcards, retinal scanners, or Borg implants at

authentication.

Execution

Strategies and Pool Management

When sketching out the

prototype architecture in the first article of this series, I

built a callback class to allow asynchronous execution of the

reporting system. But the complications involved with

queueing multiple virtual data objects and Report Builders

through the Request Broker are more than are necessary at

this point, so I’ve sidestepped the issue for the

moment. Visual Basic presently builds only single-threaded

ActiveX servers, and ActiveX serializes the requests to these

servers. Originally, the Request Broker was envisioned as a

solitary multiuse object on the remote server. With that

scenario, the Request Broker would have to deal with virtual

data objects and Report Brokers in a pool-management way. But

at this time, I’ve decided to bypass that complication

and avoid asynchronous processing, and thus pool management

as well. At a future point, both issues can be revisited.

In this article, the

application design has been simplified to be purely

synchronous, with all of the objects configured as Creatable,

Singleuse, with the public property still True. If necessary,

the users can run multiple copies of the application.

Given my application’s

design, the issue of implementing a pool manager becomes as

much a business decision as it is a technology decision. The

two primary benefits of a pool manager are improved

performance, since the users don’t have to wait for

objects to be created, and tighter security, since the pool

manager and its utilities create and own the ActiveX objects

instead of the users. When a pool manager becomes overloaded,

it must still turn away requests, or serialize them, which is

same situation that I’m in without a pool

manager—waiting until a new object is created or, if the

server’s run out of resources, waiting until an object

becomes free. You should carefully evaluate demand and

distribute users and objects to minimize overload situations.

In addition, you need to determine which virtual data objects

should be kept in the pool or pools, given the diversity of

these objects.

Optimization

In the old days of

conventional MS-DOS memory, ex-pensive RAM, and primitive

hardware, applications were optimized with multiple passes

spent squeezing every line of code. In today’s

environment of powerful operating systems, cheap RAM, fast

hardware, shorter lifecycles for application software

("disposacode"), and abstracted development tools

like MFC and DAO, optimizing is a different process.

Most of the optimization of an

enterprise application takes place at the architectural

design level. Two key parts of this process are determining

the optimal level of granularity for individual components

(where to draw the lines), and deciding where to position

these objects in order to get the best performance (run

locally, run on one remote server, or run across multiple

servers, depending on processing load and network latency).

The Application Performance Explorer

(http://www.microsoft.com/vbasic/download/vbape.htm), which

is written in Visual Basic, can be a great help in verifying

these design-and-deployment decisions that affect runtime

performance.

Once the components are

developed and the application is put together, the Visual

Basic Code Profiler (shipped with Visual Basic) does a good

job of helping you optimize the actual code by identifying

unused, dead code and resource-intensive routines that are

good targets for restructuring.

Visual Basic also provides

some time-saving shortcuts for invoking ActiveX methods or

setting and reading properties. For example, the With…End

With construct allows you to directly grab the reference to

the last component in an object.component.component.component

chain without having each intermediate component along the

chain handle the request as it goes down the hierarchy. For

example, in my project, the code in Figure 23 is used

to set a series of properties for a field object.

Figure 23 Setting

Properties

With

oMessage.Fields.Item(sCurrentFieldName)

'Cast

rdo data types

.Name

= sCurrentFieldName

.DataType

= vFixRDOData(oResultset.rdoColumns("DataType"))

.Description

= vFixRDOData (oResultset.rdoColumns _

("FieldsDescription"))

.Size

= vFixRDOData(oResultset.rdoColumns("Size"))

.Value

= vFixRDOData(oResultset.rdoColumns("Value"))

.SelectedFilter

= vFixRDOData(oResultset.rdoColumns _

("FiltersSelectedName"))

End

With

My reporting system

application, which runs synchronously on the client machine,

brings up another aspect of optimization: the subjective

perceptions of users. Since the application runs like a batch

job, it has a potentially long period of apparent inactivity

from the users’ point of view. While the SQL statement

is generated, the data is fetched from the remote servers,

and the final report is built, I run the risk of the user

thinking that the process is hung. With some applications,

this can result in unexpected problems if the user starts a

clicking fit or keyboard tantrum, trying to get some kind of

response out of the application. This won’t affect my

synchronously executing reporting system.

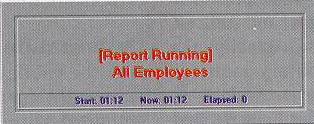

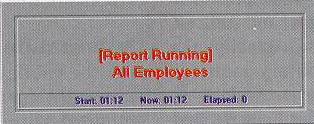

One good way to handle this

situation (in addition to trapping input from the user) is to

display a message that lets the user know that the job’s

still running (see Figure 24). It’s helpful to

have a clock that displays the system time or the elapsed

time, even if it’s updated only a few times a minute,

just to let the users know that everything’s fine and

that the application is still doing what they want it to do.

Since my users are running Windows NT, they can read their

email or look at comics or movie reviews on the Web while

reports are being processed, occasionally checking on the

progress of the application and getting some kind of

satisfactory feedback.

Figure

24 Report Running message

Microsoft

Access as a Sandbox

When used in passthrough query

mode, Microsoft Access provides an environment for isolated

testing of the huge-and-hairy SQL statements that are an

integral part of client/server development. This is

especially useful for components like the virtual data

objects, which need to speak in SQL to many different

datasources, such as Microsoft SQL Server, Sybase, Oracle,

and so on. Not all SQL is created equal; there are variations

in an optimized SQL statement that depend on the database

engine that will be executing it. When testing and

benchmarking SQL, it’s a lot easier if you don’t

have to deal with the rest of a developing Visual Basic

application at the same time.

Microsoft Access also provides

a separate ODBC connection string for each passthrough query

created, which lets you test the tuned SQL with the various

user IDs and passwords the virtual data objects will actually

use at runtime against the various databases they need to

hit.

I also like to use Microsoft

Access as a local backup of SQL Server development data,

which optimizes the development process by reducing the risk

of corrupting the living development data by using my own

local copy. For example, while designing the database that

supports the report build message objects, columns came and

went from the tables as I put the picture together. On SQL

Server, this means dropping and recreating the table, which

means that all of the data in the table ceases to exist.

Making up test data with referential integrity is not

something that you want to do more than once. If the tables

are imported into Microsoft Access, you can cut and paste the

data back into the SQL Server tables after they are

recreated.

While there are other methods

available to accomplish these tasks, Microsoft Access

provides all of these features in one handy package that you

probably already have on your desktop.

Internationalization

Successful applications

deployed in multinational corporations tend to get

distributed around the globe. There are several ways to

facilitate converting the application—at least the parts

of it that the user sees—into different languages. One

good method involves stripping all of the strings that are

displayed to the user out of the application code and storing

them in a single resource file. Icons and bitmaps, which

might also be swapped out for different locations, can be

stored in the resource file as well. In addition to getting

all of the changeable stuff in one basket, resource files

allow the application to selectively load bitmaps and strings

as needed on-the-fly, minimizing the performance hit on a

load event.

Microsoft Developer Studio

provides a good environment for editing resource files or

scripts. Saving the file from the Developer Studio produces a

file with an .rc extension, which includes instructions for

the resource compiler. Running the resource compiler that

ships with Microsoft Visual C++® on the .rc file produces

two more files, .res and .h. The .res file is the same for

Visual C++ or Visual Basic and is simply added to the

project. The .h file contains the constants used for locating

items in the .res file, and it needs to be converted into

something that Visual Basic can use.

I wrote a small standalone

Visual Basic project called Resource to do this conversion.

Resource runs at the command line and takes the path to the

.h file. It then creates a file called resource.bas in the

same directory as the .h file. The ConvertFile function from

the Resource project—essentially the heart of the

project—is shown in Figure 25.

Figure 25 ConvertFile

Function

Private

Function ConvertFile(sFileName As String) As Boolean

'The

work to convert the header file to a bas file takes place here

'Basically

trap any file or command line errors

On

Error GoTo ERROR_ConvertFile

'Set

successful completion to false

ConvertFile

= False

Dim

iInFile As Integer

Dim

iOutFile As Integer

Dim

sInString As String

Dim

sOutString As String

'Get

input file handle and open file

iInFile

= FreeFile

Open

sFileName For Input As iInFile

'Get

output file handle and open file

'Since

VB only allows one resource file we've chosen

'

RESOURCE.BAS as our result name

iOutFile

= FreeFile

Open

ExtractPath(sFileName) & "RESOURCE.BAS" For Output

As iOutFile

'Put

in the first line required by VB

Print

#iOutFile, "Attribute VB_Name =

""modResource"""

'Process

the whole file

Do

While Not EOF(iInFile)

'In

with a row

Input

#iInFile, sInString

'Looking

at a .h file generated by the Developer Studio Resource

'

editor shows that the first break in lines of text is where

'

we want to bail out

If

Left(sInString, 1) = "" Then

Exit

Do

'Else

we ignore comments

ElseIf

Left(sInString, 1) <> "/" Then

'If

it is not a comment then replace the #define with _

"Public

Const"

sOutString

= "Public Const" & Right(sInString, _

Len(sInString)

- Len("#define"))

'Put

an equal (=) sign between the const name and value

Dim

iLastSpace As Integer

iLastSpace

= FindLastSpace(sOutString)

sOutString

= Left(sOutString, iLastSpace) & "= " & _

Right(sOutString,

Len(sOutString) - iLastSpace)

'Print

result to file

Print

#iOutFile, sOutString

End

If

Loop

'Close

files

Close

iInFile

Close

iOutFile

'If

we made it this far we were successful

ConvertFile

= True

Exit

Function

As an example of how this

works, I stripped the strings out of the Resource project

itself. After feeding the .rc file through the resource

compiler, the resource.h file looks like Figure 26.

After running the Resource conversion program on resource.h,

the resulting resource.bas looks like Figure 27.

Figure 26 Resource.h

//{{NO_DEPENDENCIES}}

//

Microsoft Developer Studio generated include file.

//

Used by Resource.rc

//

#define

STR_HELP 1

#define

STR_FAIL_PART_1 2

#define

STR_FAIL_PART_2 3

#define

STR_SUCCESS_PART_1 4

#define

STR_SUCCESS_PART_2 5

#define

ICON_MAIN 102

//

Next default values for new objects

//

#ifdef

APSTUDIO_INVOKED

#ifndef

APSTUDIO_READONLY_SYMBOLS

#define

_APS_NEXT_RESOURCE_VALUE 103

#define

_APS_NEXT_COMMAND_VALUE 40001

#define

_APS_NEXT_CONTROL_VALUE 1000

#define

_APS_NEXT_SYMED_VALUE 101

#endif

#endif

Figure 27 Resource.bas

Attribute

VB_Name = "modResource"

Public

Const STR_HELP = 1

Public

Const STR_FAIL_PART_1 = 2

Public

Const STR_FAIL_PART_2 = 3

Public

Const STR_SUCCESS_PART_1 = 4

Public

Const STR_SUCCESS_PART_2 = 5

Public

Const ICON_MAIN = 102

The resources contained in the

.res file are actually rolled into the Visual Basic

executable at build. This means that creating

different-language versions of the application entails more

than simply swapping in different resource files at

deployment. Each language the application is released in will

require a separate pass through the resource compiler and a

separately-built executable. The drawbacks to this approach

are that it involves a fairly tedious (read "vulnerable

to error") process, and that it lacks any means of

centralized control. In addition to adding a layer of

complexity when adding enhancements or updates to the

application, this method introduces the risk of divergent

code paths.

As alternatives to standard

resource files, there are a couple of other options for

adding multilanguage support to my reporting system

application. Given that the application’s bitmaps and

icons won’t be changing, I can load all of the strings

into a central repository. Since the application is designed

to access a SQL Server 6.5 business service component at

runtime, a single string table out on the SQL Server, with a

two-field primary key composed of the string- and language

identifiers, plus a third field for the text of the string

itself, would be a good candidate for the string repository.

The strings would be loaded down from the server at startup.

As a faster option, the strings could be cached locally on

the client, in either an .mdb file or, for even better

performance, in a lightweight flatfile. Down the road, I can

consider building an ActiveX server component to dynamically

pass the strings to the application.

As another option, I can build

a mechanism to help Visual Basic have some of the flexibility

of C. C programs are able to dynamically read resource DLLs

during execution without recompiling. Changing the language

of the strings or the appearance of the bitmaps and icons

used by the application can be a simple matter of changing

the DLLs. For my 32-bit Visual Basic application I can

consider compiling the resource files, along with routines

for loading the strings and images, as in-process ActiveX

DLLs, which can be dynamically replaced. These ActiveX DLLs

would be like resource files on steroids.

Converting the strings,

bitmaps, and icons that an application uses doesn’t help

with error or status messages returned from the Visual Basic

or operating system runtime DLLs. Fortunately, there are

versions of Visual Basic and Windows NT in various languages,

so the application can be rebuilt and setup disks created in

another language environment from the ground up.

Summary

In the first part of this

series, I examined the architecture of the reporting system

application and outlined the API for all of its components.

In this article, I focused on

the implementation and locked down the Reporting System API.

On the user service front, I built a Web browser viewer that

displays report data through IIS. I rounded out the business

service Request Broker and expanded the messaging object that

carries data between all of the components. I developed a

complete, functioning virtual data object and an HTML Report

Builder. And I exploited the ease-of-use and flexibility of

the Microsoft SQL Server business service component to

support the application at just about every step, in-cluding

replacing stubbed info with real data from my friends at

Northwind. I also looked at design tradeoffs and options in

security, pool management, optimization, and

internationalization.

The Visual Basic components

that are discussed and built in this article are designed to

create an Employee Info report that’s displayed as an

HTML page. But that’s just an example; the real value of

this system is that the API will remain unchanged for any

report you wish to create by plugging in new components based

on the samples in this article. Give it a try!

In Part III, I’ll wrap up

the development phase and cover deployment issues such as QA

testing and handling maintenance issues and enhancement

requests for the application. And I’ll finally get to

talk about Visual Basic 5.0. Stay tuned.

To

obtain complete source code listings, see Editor's page.

This article is reproduced

from Microsoft Systems Journal. Copyright © 1997 by Miller

Freeman, Inc. All rights are reserved. No part of this

article may be reproduced in any fashion (except in brief

quotations used in critical articles and reviews) without the

prior consent of Miller Freeman.

To contact Miller

Freeman regarding subscription information, call (800)

666-1084 in the U.S. and Canada, or (303) 678-0439 in all

other countries. For other inquiries, call (415) 905-2200.